Drivers misusing and abusing Tesla Autopilot system

- A study found Tesla drivers are distracted while using Autopilot

- Tesla’s Autopilot is a hands-on driver-assist system, not a hands-off system

- The study notes more robust safeguards are needed to prevent misuse

Driver-assist systems like Tesla Autopilot are meant to reduce the frequency of crashes, but drivers are more likely to become distracted as they get used to them, according to a new study published Tuesday by the Insurance Institute for Highway Safety (IIHS).

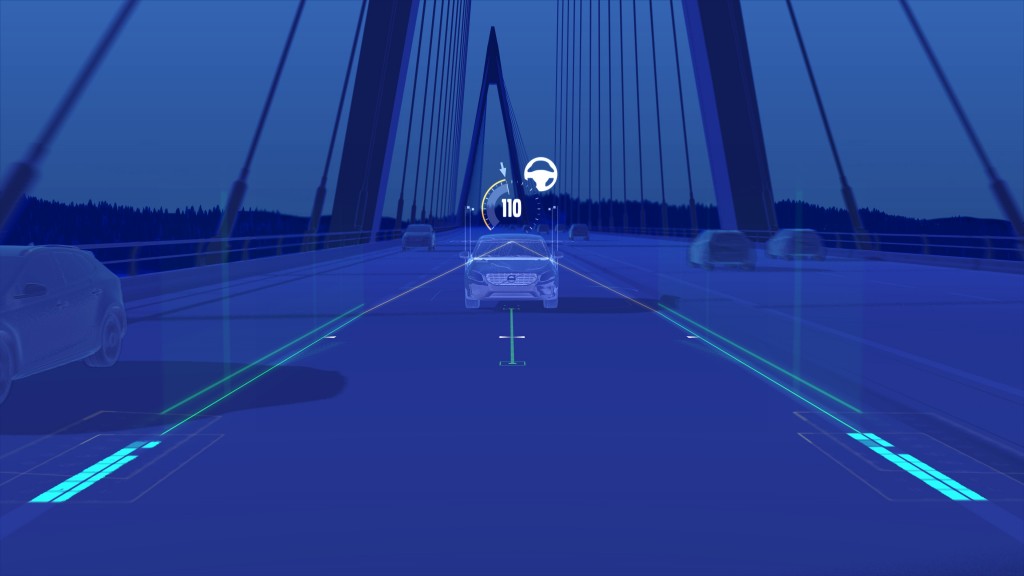

Tesla’s Autopilot and Volvo’s Pilot Assist were examined in two separate studies by the IIHS and the Massachusetts Institute of Technology’s AgeLab. Both studies showed that drivers tended to engage in distracting behaviors while still meeting the bare-minimum attention requirements of these systems, which the IIHS refers to as “partial automation” systems.

In one study, researchers analyzed how the driving behavior of 29 volunteers supplied with a Pilot Assist-equipped 2017 Volvo S90 changed over four weeks. Researchers focused on how likely volunteers were to engage in non-driving behaviors when using Pilot Assist on highways relative to unassisted highway driving.

Pilot Assist in a 2017 Volvo S90

Drivers using Pilot Assist were much more likely to “check their phones, eat a sandwich, or do other visual-manual activities” than when driving unassisted, the study found. That tendency generally increased over time as drivers got used to the systems, although both studies found that some drivers engaged in distracted driving from the outset.

The second study looked at the driving behavior of 14 volunteers driving a 2020 Tesla Model 3 equipped with Autopilot over the course of a month. For this study, researchers picked people who had never used Autopilot or an equivalent system, and focused on how often drivers triggered the system’s attention warnings.

Researchers found that the Autopilot newbies “quickly mastered the timing interval of its attention reminder feature so that they could prevent warnings from escalating into more serious interventions” such as emergency slowdowns or lockouts from the system.

2024 Tesla Model 3

“In both these studies, drivers adapted their behavior to engage in distracting activities,” IIHS President David Harvey said in a statement. “This demonstrates why partial automation systems need more robust safeguards to prevent misuse.”

Earlier this year, based on a different data set, the IIHS concluded that assisted driving systems don’t increase safety, and it has advocated for more in-car safety monitoring to prevent a net-negative effect on safety. In March 2024, it completed testing of 14 driver-assist systems across nine brands and found that most were too easy to misuse. Autopilot in particular was found to confuse drivers into thinking it was more capable than it really was.

Autopilot’s shortcomings have also drawn attention from U.S. safety regulators. In a 2023 recall, Tesla restricted the behavior of its Full Self-Driving Beta system, which regulators said posed “an unreasonable risk to motor vehicle safety.” Tesla continues to use the misleading Full Self-Driving label even though the system offers no such capability.
